We are seeing a huge increase in the use of Natural Language Processing techniques, but there is an even bigger potential still to be harnessed in the coming months and years. As Sebastian Ruder has said, we are living through NLP’s ImageNet moment. New and better language models seem to come out of deep learning research teams every week.

As Machine Learning and Artificial Intelligence practitioners, our job is to build real-world applications that solve business needs, weighing both the latest advances and practical implementation issues. We may not adopt the latest, biggest, state-of-the-art model as soon as it appears on GitHub or TensorFlow Hub; instead we may use an easier-to-implement, faster, or lighter model until a more complex one makes sense. That said, it helps to have a method for evaluating models quickly and easily on our specific business tasks. Some of the results can also serve as general guidelines on model performance, in the hope that they generalize to other tasks.

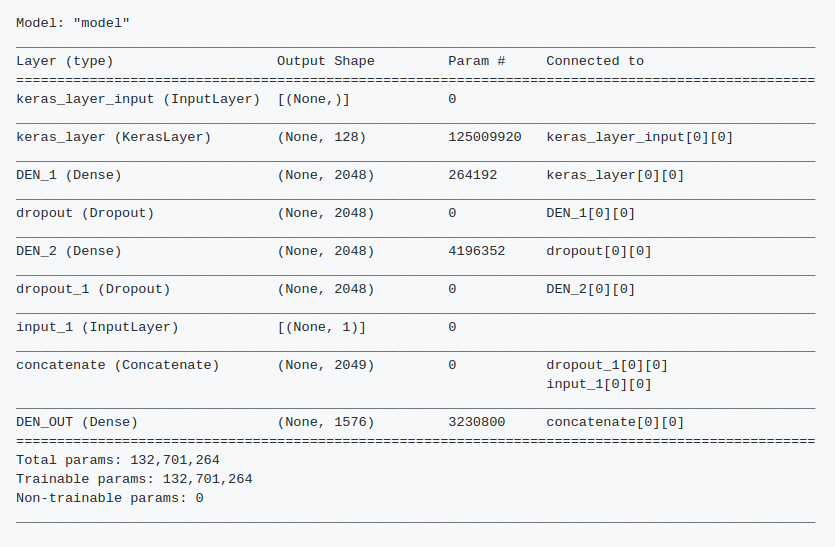

I’ve been working on such a method, and I’d like to share what I found from comparing several models based on the Neural-Network Language Model (NNLM) that Google makes available on TensorFlow Hub, focusing on Spanish. There are fewer resources for Spanish than for other languages, so I hope this helps. The most important results:

- NNLM models trained on Spanish performed better than those trained on English.

- Normalized models performed better than non-normalized models.

- In general, the 50- and 128-dimension models performed similarly, although hyperparameter optimization may improve both, especially the latter.
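To make the comparison above concrete, here is a minimal sketch of how the grid of model variants (language, dimensions, normalization) could be enumerated. The handle pattern and the helper names `nnlm_handles` and `load_embedder` are my assumptions for illustration, not necessarily what the repository uses; check each handle on TensorFlow Hub before relying on it.

```python
from itertools import product

# Assumed handle pattern for the NNLM text embeddings on TensorFlow Hub.
HANDLE_TEMPLATE = "https://tfhub.dev/google/nnlm-{lang}-dim{dim}{norm}/2"


def nnlm_handles(languages=("es", "en"), dimensions=(50, 128)):
    """Return {short name: handle} for every language/dimension/normalization combination."""
    handles = {}
    for lang, dim, normalized in product(languages, dimensions, (False, True)):
        norm = "-with-normalization" if normalized else ""
        name = f"nnlm-{lang}-dim{dim}{norm}"
        handles[name] = HANDLE_TEMPLATE.format(lang=lang, dim=dim, norm=norm)
    return handles


def load_embedder(handle):
    """Load one model. Requires tensorflow, tensorflow_hub and network access."""
    # Imported lazily so the grid above can be built without TensorFlow installed.
    import tensorflow_hub as hub

    return hub.load(handle)


if __name__ == "__main__":
    # List the variants that would be compared.
    for name, handle in nnlm_handles().items():
        print(name, handle)
```

Under these assumptions, a loaded embedder can be called on a list of sentences, for example `load_embedder(handle)(["hola mundo"])`, and should return one vector per sentence. Feeding the same task data through each variant is what makes results like those above directly comparable.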

Please see this GitHub repository for details and full results. I hope to add more results from other models.